AI Collaborates to Solve Complex Problems: Sakana AI's Challenge and Human-Like Trial and Error

I'm Tak@. I work as a system integrator, and in my free time, I enjoy developing web services using generative AI.

In this post, I'd like to explore with you a cutting-edge technology that lets AI models collaborate to solve complex problems, much as humans do.

Learning from Human Wisdom: Why is AI "Collaboration" Gaining Attention Now?

Humans have overcome major problems that couldn't be solved alone by collaborating with each other. Historic achievements like the Apollo program, the birth of the internet, and the Human Genome Project were never accomplished by a single genius.

People with diverse expertise and perspectives came together, sometimes clashing and sometimes merging their ideas, to break through countless technical barriers. This is the power of "collective intelligence," captured in the Japanese proverb "Three people together have the wisdom of Manjushri" (Manjushri being the Bodhisattva of wisdom), roughly the equivalent of "Two heads are better than one."

I believe this human wisdom applies equally to the world of AI.

Cutting-edge AI models like ChatGPT, Gemini, and DeepSeek are evolving at an astonishing pace, but each has a "personality" stemming from its unique training data and methods.

Some models excel at programming, others at creative writing, and still others at executing a series of tasks in sequence. Their strengths and weaknesses vary widely.

I see these "personalities" or "biases" in AI not as mere limitations, but rather as valuable resources for generating "collective intelligence."

Just as a "dream team" of diverse experts can crack complex problems, AIs that pool their respective strengths and collaborate should be able to tackle problems that no single model can solve on its own.

Expanding AI's "Thinking Power": The Concept of Inference-Time Scaling

What do you do when you face a difficult problem?

Most of the time, you probably "think long and hard," "learn by trying things out," or "work with others." The idea of applying these human problem-solving methods to AI is at the heart of the concept of "inference-time scaling."

Until recently, improving AI performance was thought to depend mainly on how much computation was spent training the model (training-time scaling).

However, in recent years, it's become clear that even after a model has been trained, its performance can be significantly improved by investing more computational resources during inference.

This is the equivalent of a human "thinking longer" about a problem. For example, "reasoning models" such as OpenAI's o1/o3 and DeepSeek's R1 dramatically boost their capabilities by generating longer chains of thought.

But it's not just about making AI "think longer."

When we tackle a complex programming task, we write code, run it, find and fix bugs, and sometimes start over from scratch: a process of "trial and error." Could AI do this efficiently too?

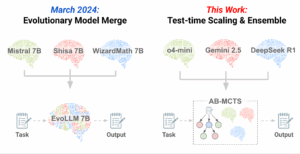

Furthermore, could multiple AIs "collaborate" by leveraging their respective strengths? Sakana AI's newly announced algorithm, "AB-MCTS," explores the possibilities of inference-time scaling from both of these directions: "trial and error" and "collaboration."

AI's Smart "Trial and Error": How AB-MCTS Works

AB-MCTS (Adaptive Branching Monte Carlo Tree Search) is an inference-time scaling technique designed to enable efficient "trial and error" in LLMs (Large Language Models).

Until now, there have been two simple, widely used approaches to trial and error.

One is a "deep dive" exploration method called "sequential refinement."

This approach involves having an LLM generate an answer and then iteratively refining it. However, if the initial answer is fundamentally flawed, no amount of refinement will lead to a good solution.

The other is a "breadth-oriented" exploration method called "iterative sampling."

This involves posing the same question to an LLM many times to generate many different answers. Although it looks wasteful, eliciting diverse outputs this way has been reported to perform well on many benchmarks. Its weakness is that it never improves an imperfect answer; it can only draw a fresh one.
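To make the two baselines concrete, here is a toy Python sketch. The functions `generate` and `refine` are hypothetical stand-ins for LLM calls, not a real API, and the numbers are invented purely for illustration: each answer has a hidden "ceiling" (how good its underlying approach can ever become) and a current score, a fresh draft starts at half its ceiling, and each refinement adds 0.1 without ever passing the ceiling.

```python
import random

# Toy stand-ins (hypothetical, NOT real LLM calls). An answer has a hidden
# "ceiling" (the best its underlying approach can ever reach) and a current score.

def generate(rng: random.Random) -> dict:
    """Ask the 'LLM' for a fresh answer: a random approach and a rough first draft."""
    ceiling = rng.random()
    return {"ceiling": ceiling, "score": 0.5 * ceiling}

def refine(answer: dict) -> dict:
    """Ask the 'LLM' to improve an answer; gains are capped by that answer's ceiling."""
    return {"ceiling": answer["ceiling"],
            "score": min(answer["ceiling"], answer["score"] + 0.1)}

def sequential_refinement(budget: int, rng: random.Random) -> float:
    """Deep dive: one initial draft, then spend the rest of the budget refining it."""
    answer = generate(rng)
    for _ in range(budget - 1):
        answer = refine(answer)
    return answer["score"]

def iterative_sampling(budget: int, rng: random.Random) -> float:
    """Broad search: spend every call on a fresh draft and keep the best score."""
    return max(generate(rng)["score"] for _ in range(budget))
```

In this toy model, sequential refinement can never beat the ceiling of its first draft, and iterative sampling can never beat 0.5 because it never polishes a draft. These are the two limitations described above, in miniature.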

It was known that both "deep diving" and "broad searching" help LLMs find better answers, but there was no effective way to combine the two.

That's where AB-MCTS comes in. It allows for flexible exploration in both "deep dive" and "broad search" directions, adapting to the problem and situation.

AB-MCTS is an extension of Monte Carlo Tree Search (MCTS), which was also used in AlphaGo.

Standard MCTS typically works with a fixed search breadth: each node has a fixed set of candidate moves, as in Go. AB-MCTS removes this constraint so that it can take full advantage of the virtually unlimited variety of answers an LLM can produce.

Specifically, at each stage (node) of the search, it intelligently decides whether to pursue a "broad search" (generating completely new solutions) or a "deep dive" (refining existing promising solutions) using a probabilistic method called "Thompson Sampling."
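A rough idea of how Thompson Sampling can arbitrate between "go wider" and "go deeper" at a single node is sketched below. This is a simplified Beta-Bernoulli version written for illustration; the probabilistic models Sakana AI actually uses are different and more elaborate. It assumes every answer's score is scaled to [0, 1], keeps one arm for "generate a new answer" and one arm per existing child, draws one sample from each arm, and takes whichever draw is highest. `BetaArm` and `choose_action` are hypothetical names.

```python
import random
from collections import Counter

class BetaArm:
    """Beta posterior over how rewarding one option is (rewards scaled to [0, 1])."""

    def __init__(self, alpha: float = 1.0, beta: float = 1.0):
        self.alpha = alpha  # prior pseudo-count of "good" outcomes
        self.beta = beta    # prior pseudo-count of "bad" outcomes

    def sample(self, rng: random.Random) -> float:
        return rng.betavariate(self.alpha, self.beta)

    def update(self, reward: float) -> None:
        # Fractional update: a reward of 0.7 counts as 0.7 good + 0.3 bad.
        self.alpha += reward
        self.beta += 1.0 - reward

def choose_action(wider: BetaArm, children: list, rng: random.Random):
    """Thompson Sampling at one node (simplified, hypothetical).

    Returns ("wider", None) to generate a brand-new answer, or
    ("deeper", i) to keep refining existing child i.
    """
    best, best_draw = ("wider", None), wider.sample(rng)
    for i, arm in enumerate(children):
        draw = arm.sample(rng)
        if draw > best_draw:
            best, best_draw = ("deeper", i), draw
    return best

if __name__ == "__main__":
    rng = random.Random(0)
    wider, children = BetaArm(), [BetaArm(), BetaArm()]
    for _ in range(20):
        wider.update(0.3)        # fresh answers have scored so-so
        children[0].update(0.9)  # child 0 keeps scoring well when refined
        children[1].update(0.1)  # child 1 looks like a dead end
    print(Counter(choose_action(wider, children, rng) for _ in range(1000)))
```

Because choices come from random draws rather than always taking the highest average, a weaker option still gets picked occasionally while its posterior is uncertain, which keeps the search from locking onto one idea too early.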

As a result, it can find better answers than existing methods, such as the two simple approaches above, with the same computational budget.

It's as if AI is replicating the flexible human thought process, where sometimes we say, "Okay, let's try a new approach!" to broaden our ideas, and at other times we say, "Let's refine this idea a bit more" to delve deeper.

AIs Pooling Their "Wisdom": The Advent of Multi-LLM AB-MCTS

While it's impressive that AB-MCTS allows AI to intelligently engage in trial and error, Sakana AI's challenge goes even further with "Multi-LLM AB-MCTS," where multiple different LLMs collaborate.

As mentioned, cutting-edge LLMs each have different areas of expertise. One AI might be good at overall strategy, while another excels at writing specific code.

If we can get these AIs with different "personalities" to "collaborate" so their strengths are maximized, rather than just pooling them, then problems that a single model couldn't solve should become solvable by the AIs' "collective intelligence."

Multi-LLM AB-MCTS adds a new choice: "which LLM to use," on top of the existing AB-MCTS mechanism. During exploration, in addition to choosing whether to "generate" new solutions (Go Wider) or "refine" existing ones (Go Deeper), it adaptively selects "which LLM to use."

This question of "which LLM to choose" is similar to a problem known in machine learning as the "multi-armed bandit problem."

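Picture a slot machine with several arms (choices) whose payouts are unknown. The challenge is to win as much as possible by balancing two goals through experimentation: trying different arms to learn how good they are (exploration) and pulling the arm that currently looks best (exploitation).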

In Multi-LLM AB-MCTS, performance data for each LLM accumulates as the search progresses. Based on this data, it uses Thompson Sampling, a Bayesian strategy that draws a random sample from each LLM's estimated performance distribution and picks the LLM with the highest draw, to decide "which LLM is most likely to produce the best answer in this situation."

In other words, it starts by using various LLMs in a balanced way, then intelligently focuses its usage on the LLMs that prove more promising.
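The LLM-selection step can be sketched as a classic Bernoulli bandit. This is an illustration under loud assumptions: each call simply succeeds or fails, the model names are placeholders with made-up hidden success rates, and the real Multi-LLM AB-MCTS makes this choice alongside the wider/deeper decision using score-based models. `thompson_select` and `run_bandit` are hypothetical names.

```python
import random

def thompson_select(stats: dict, rng: random.Random) -> str:
    """Pick the model whose sampled success rate is highest.

    stats maps model name -> [successes, failures]; each model has a Beta(1, 1) prior.
    """
    return max(stats, key=lambda m: rng.betavariate(stats[m][0] + 1, stats[m][1] + 1))

def run_bandit(true_rates: dict, calls: int, seed: int = 0) -> dict:
    """Simulate `calls` LLM calls and count how often each model was chosen."""
    rng = random.Random(seed)
    stats = {m: [0, 0] for m in true_rates}
    usage = {m: 0 for m in true_rates}
    for _ in range(calls):
        model = thompson_select(stats, rng)
        usage[model] += 1
        # Simulated outcome; in practice this would come from scoring the model's answer.
        succeeded = rng.random() < true_rates[model]
        stats[model][0 if succeeded else 1] += 1
    return usage

if __name__ == "__main__":
    # Hypothetical hidden success rates for three unnamed models.
    print(run_bandit({"model_a": 0.2, "model_b": 0.5, "model_c": 0.35}, calls=250))
```

Running the simulation typically shows most of the budget flowing to the model with the highest hidden rate, while the others still receive some exploratory calls early on.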

Astonishing Results on the Challenging "ARC-AGI-2" Benchmark

To test the power of Multi-LLM AB-MCTS, Sakana AI conducted an experiment on the extremely difficult "ARC-AGI-2" benchmark.

ARC-AGI (Abstraction and Reasoning Corpus) was created to evaluate human-like flexible reasoning and efficient problem-solving abilities, unlike traditional AI evaluations that test general knowledge or specific skills.

Even ARC-AGI-1 was a major challenge for AI, but this time, the even more difficult ARC-AGI-2 was chosen.

In the experiment, the search was given a budget of up to 250 LLM calls, and the models were asked to express each task's transformation rule as Python code.

Each generated program was scored (rewarded) by how many of the task's demonstration examples it solved correctly. Performance was then reported with the "Pass@k" metric, which counts a problem as solved if at least one correct solution is found within k attempts; this isolates the search capability itself.
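The evaluation described above can be sketched in a few lines. This assumes ARC grids are represented as lists of lists of integers and that a candidate program is an ordinary Python function from grid to grid; `demo_reward` and `pass_at_k` are hypothetical helper names, and Sakana AI's actual harness may differ. `pass_at_k` follows the plain definition used here (solved if any of the first k attempts is correct).

```python
from typing import Callable, List, Sequence, Tuple

Grid = List[List[int]]
Program = Callable[[Grid], Grid]

def demo_reward(program: Program, demos: Sequence[Tuple[Grid, Grid]]) -> float:
    """Fraction of demonstration (input, output) pairs the program reproduces exactly."""
    solved = 0
    for grid_in, grid_out in demos:
        try:
            if program(grid_in) == grid_out:
                solved += 1
        except Exception:
            pass  # a crashing program simply earns nothing for this example
    return solved / len(demos)

def pass_at_k(attempt_results: Sequence[Sequence[bool]], k: int) -> float:
    """Share of problems with at least one correct answer among their first k attempts."""
    solved = sum(any(results[:k]) for results in attempt_results)
    return solved / len(attempt_results)

if __name__ == "__main__":
    demos = [([[1, 0], [2, 3]], [[0, 1], [3, 2]]), ([[5, 5]], [[5, 5]])]

    def mirror(g: Grid) -> Grid:
        return [row[::-1] for row in g]  # flip each row left-to-right

    def identity(g: Grid) -> Grid:
        return g

    print(demo_reward(mirror, demos), demo_reward(identity, demos))  # prints: 1.0 0.5
```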

The results were truly astonishing.

- AB-MCTS Surpasses the Limits of Iterative Sampling: Iterative sampling (broad exploration) with a single model, o4-mini, solved 23% of the problems, while AB-MCTS raised the success rate to 27.5%. The advantage of AB-MCTS became especially clear beyond 50 LLM calls.

- "Collective Intelligence" Paves the Way: Then, Multi-LLM AB-MCTS showed its true value. By combining multiple cutting-edge models—o4-mini, Gemini-2.5-Pro, and DeepSeek-R1-0528—it ultimately succeeded in finding correct solutions for over 30% of the problems.

- Fusion of "Personalities": Interestingly, DeepSeek-R1-0528 did not show particularly high performance on its own, yet when incorporated into Multi-LLM AB-MCTS, it efficiently increased the number of problems that could be solved. This clearly demonstrates the power of "collective intelligence," where understanding and complementing the characteristics of each LLM, rather than just using the best performing one, improves overall performance.

What's particularly impressive is that some problems no single LLM could solve were solved through the collaboration of multiple LLMs.

For example, in one problem, the initial solution generated by o4-mini was incorrect, but DeepSeek-R1-0528 and Gemini-2.5-Pro used it as a hint and arrived at the correct solution in the next step.

This shows that Multi-LLM AB-MCTS brings a flexible collaborative system to AI, allowing them to learn from each other's failures and reinforce each other, much like a human team.

Tak@'s Perspective: The Future of AI Where Technology and Creativity Intersect

As a system integrator, I've been involved in a very wide range of system development projects, from factory production management systems and complex medical school timetabling software to large-scale core system replacements and library systems that handle physical media.

Through these experiences, I feel I've honed my ability to understand the core of systems and apply technology.

At the same time, I started developing web services as a hobby more than 15 years ago, and with the conviction that "generative AI is the ultimate mashup tool!", I'm passionate about creating tools that bring everyday "Wouldn't it be nice if…" ideas to life.

Sakana AI's newly announced AB-MCTS, and Multi-LLM AB-MCTS in particular, struck me as sitting exactly where my systems experience and my passion for the endless possibilities of generative AI meet.

Adding a new perspective of "collaboration" to the world of AI development, which has often focused on competing individual AI model capabilities, feels like a breath of fresh air.

The tools I'm developing, such as the "AI Learning Planner", "AI Business Automation Proposal Service", and "AI Programmer", also aim to "assist human creative activities" by leveraging AI's power.

By not just using the unique capabilities of individual AIs in isolation, but by linking multiple AIs and having them collaborate like a human team, the possibilities for solving even more complex and creative challenges expand.

Our Relationship with AI Going Forward

Sakana AI's research suggests that there's still enormous, unexplored potential in AI inference-time scaling.

While "reasoning models," which gained attention from around mid-2024, have boosted AI capabilities by "thinking longer," AB-MCTS opens up new directions: "trial and error" and "collective intelligence."

Of course, challenges remain. For example, the "Pass@k" metric used in this experiment measures search capability itself, which differs from the "Pass@2" setting often used in actual competitions, where only two final candidates may be submitted and one of them must be correct.
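The gap between the two metrics is easy to see in a toy example. Assume several explored programs fit all the demonstration examples but only one of them generalizes to the hidden test input, and assume the two final submissions are picked by demonstration score alone (a deliberately naive rule; `Candidate`, `solved_pass_at_k`, and `solved_pass_at_2` are hypothetical names). The problem then counts as solved under Pass@k but not under Pass@2.

```python
from typing import List, NamedTuple

class Candidate(NamedTuple):
    name: str            # hypothetical identifier for one generated program
    demo_score: float    # reward on the demonstration examples (0.0 to 1.0)
    test_correct: bool   # solves the hidden test input (unknown at submission time)

def solved_pass_at_k(candidates: List[Candidate]) -> bool:
    """Pass@k-style: solved if ANY explored candidate is correct."""
    return any(c.test_correct for c in candidates)

def solved_pass_at_2(candidates: List[Candidate]) -> bool:
    """Pass@2-style: only the two highest-scoring candidates are submitted.

    sorted() is stable, so ties keep their generation order.
    """
    submitted = sorted(candidates, key=lambda c: c.demo_score, reverse=True)[:2]
    return any(c.test_correct for c in submitted)

if __name__ == "__main__":
    pool = [
        Candidate("prog_1", 1.0, False),  # fits the demos but overfits
        Candidate("prog_2", 1.0, False),  # fits the demos but overfits
        Candidate("prog_3", 1.0, True),   # fits the demos and generalizes
        Candidate("prog_4", 0.5, False),
    ]
    print(solved_pass_at_k(pool), solved_pass_at_2(pool))  # prints: True False
```

Ranking by demonstration score cannot tell apart programs that all fit the examples perfectly, which is why the final answer-selection step deserves research of its own.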

To bridge this gap, further research is needed, such as more sophisticated final answer selection algorithms and "LLM-as-a-Judge," which uses LLMs themselves as evaluators.

Also, the relationship with other AI collaboration methods like Multiagent Debate, Mixture-of-Agents, and LE-MCTS is an area that needs deeper exploration in future research.

However, precisely because of these challenges, I believe the future of AI will be even more exciting.

If AI becomes not just a high-performance computer, but an entity that "learns through trial and error" and "collaborates" with each other, much like us humans, it will have an immeasurable impact on our lives and work.

Sakana AI plans to continue applying principles inspired by nature, such as "evolution" and "collective intelligence," to AI development.

I hope to help you "turn your ideas into reality" using technology and creativity. As both a developer and an AI user, I'm truly looking forward to seeing what "previously impossible things" become possible now that AI has gained the new power of "collaboration."