Ending AI's "Wild West"? The Future Indicated by Stability AI's Policy Revision

Hello, I'm Tak@, a systems integrator. Are you aware of a recent piece of news that sent shockwaves through the AI industry?

It was an event that put an unprecedented "halt" to the free development and use of AI models.

In just a few days, what we once took for granted – the unbridled creation of AI content – suddenly became subject to strict new rules.

The New Ripple Effect: Stability AI's Policy Revision – What's Changing?

July 31, 2025: A New Boundary for AI "Freedom"

On July 31, 2025, Stability AI, the developer behind the image generation AI "Stable Diffusion," will implement new terms of use. This revision is drawing significant attention, particularly for its comprehensive prohibition of "generating sexual content."

Acceptable Use Policy — Stability AI

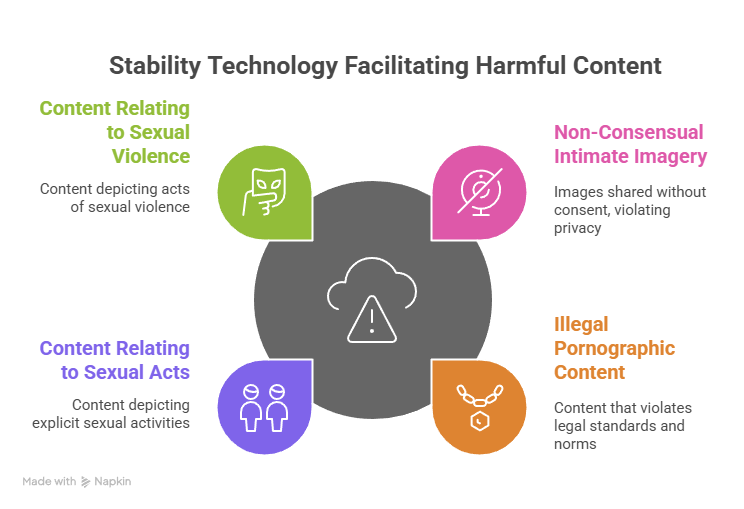

Specifically prohibited content includes non-consensual intimate images (NCII), illegal pornographic content, content related to sexual acts or sexual violence, and child sexual abuse material (CSAM).

While illegal content was already forbidden under previous terms, this revision now explicitly restricts "sexual content itself."

The scope of these new terms is very broad, applying to all "Stability AI Technology," including Stability AI's models, products, services, APIs, software, and other materials.

This also covers usage in "local environments," where you run Stable Diffusion code and "weights" (model data) on your own machine, as well as use through third-party platforms. The policy clearly states that violations could result in severe measures, such as account suspension or freezing, and even reporting to relevant authorities.

When I first heard this news, I was once again struck by the profound impact of AI's evolution on society. It felt like AI, which until now had pursued "what's possible" from a technical standpoint, was now strongly confronted with "what shouldn't be done."

Does "Local Use is Fine" Still Apply? The Complex Relationship Between Licenses and Terms of Use

In response to this policy revision, a particularly active debate among users revolves around whether running models on a local computer (a "local environment") would be exempt.

This stems from the fact that previously released Stable Diffusion models, such as "Stable Diffusion 1.5" and "Stable Diffusion XL (SDXL)," were provided under "irrevocable" licenses.

This concept suggests that once something is released, usage restrictions cannot be added later. There are also arguments that technical regulation is difficult for locally used models, as they are hard to track.

Indeed, if you use models released before July 31 and they were provided under an irrevocable license, you might assume that content generation, for both personal and commercial use, would remain unrestricted, as before.

In fact, some predict that for AI-powered merchandise sales and doujinshi (fan-made publications) creation, existing commercial use rights will be maintained as long as models from SDXL or earlier are used, and that no legal issues will arise.

However, in its new terms, Stability AI clearly states, "By using Stability AI Technology, you agree to this policy." This can be interpreted to mean that even if you use older models, your future usage itself might become subject to the new terms.

If the content generated is deemed problematic, you might not be able to simply say, "It's an old model, so it's fine."

Furthermore, cloud services like "Google Colab" already prohibit adult content generation and have implemented NSFW (Not Safe For Work) content filtering.

After July 31, with dual restrictions from both Stability AI and Google, an even stricter vetting system is expected. This suggests that not only technical aspects but also the "intentions" of platforms and "external pressures" could influence model distribution and access.

I believe this situation shows that we have entered an era in which using AI technology means taking greater responsibility for the act of usage itself, that is, for "who uses the technology, and with what intent," and not just for the technical aspects.

Towards the Era of "Responsible AI": A Legal and Ethical Paradigm Shift

AI-Generated Content and Copyright: The Unseen "Gray Zone"

The evolution of AI also poses new challenges regarding copyright. Generative AI creates new content by learning from vast amounts of data, much of which includes copyrighted works.

In Japan, under Article 30-4 of the Copyright Act, the use of copyrighted works for AI development (learning purposes) is permitted without authorization under certain conditions. However, this is not unlimited, and there's a view that use solely for imitation or reproduction should be distinguished.

Furthermore, different countries have varying approaches to the copyright of AI-generated content itself.

In Japan, "human creativity" is generally a requirement for copyright protection, so content entirely generated autonomously by AI is typically not protected. However, if human creative input is added, or if the instructions given to the AI (prompts) show creativity, protection may be possible.

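Similarly, in the U.S., human authorship is required. A detailed and creative prompt may itself be eligible for copyright protection as a written work, but the U.S. Copyright Office has indicated that prompts alone generally do not make the generated output copyrightable.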

It feels like a new challenge of the digital age that a single prompt can inadvertently expose one to the risk of copyright infringement. Instructing an AI to mimic the style of a specific artist can produce output that closely resembles that artist's works, which may amount to infringement, and a growing number of such cases have drawn criticism or legal action.

"Simulated Child Pornography" Highlights the Limits of Legal Systems

The Stability AI policy revision is rooted in the serious social problem of a surge in AI-generated child sexual abuse material (CSAM). This issue highlights new legal challenges brought about by AI.

Until now, Japan's Child Pornography Prohibition Law has primarily targeted content depicting "the actual figures of children," meaning AI-generated "simulated child pornography" has generally been exempt from punishment.

It is common for the face to be that of an actual child while the body is someone else's or AI-generated. This makes identification difficult, and judging such cases is not easy.

In qualitative terms, this content is not so different from simulated content created with traditional "morphing" or "collage" techniques. The difference is quantitative: AI has made it "incredibly easy, inexpensive, and possible to create a large volume of high-quality content," which makes the problem more severe.

The current situation, where technology is advancing faster than legal frameworks can keep up, is akin to the challenges of managing uncertainty and risks in project management. In the AI world, this "uncertainty" manifests as a gap between technological progress and legal systems.

While attempts are being made to address this with existing laws like defamation and insult, judging the quality of generated images and whether they lead to a "decrease in social reputation" is difficult, and adequate responses may not always be possible.

As a more fundamental issue, discussions centered on the infringement of personal rights, such as the "right to identity of self-image," will become increasingly important in the future.

The Wave of Global Regulation and the Need for "Responsible AI"

In response to these legal and ethical challenges surrounding AI, regulatory movements are accelerating worldwide. In Europe, the "EU AI Act," the world's first comprehensive AI regulatory law, received final approval in May 2024 and entered into force that August. In South Korea, what has been described as Asia's first comprehensive AI law was passed in December 2024.

These laws aim to manage the risks of AI development and use, and to build trustworthy AI systems.

Particularly noteworthy is the concept of "Responsible AI." This refers to a set of guidelines for considering the societal impact and aligning with ethical principles in the design, development, deployment, and use of AI. Specifically, "pillars of trust" such as "explainability" (understanding AI's decision-making), "fairness" (absence of bias), "robustness" (ability to handle unusual situations), "privacy" (protection of personal information), and "transparency" (understanding AI's mechanisms) are advocated.

For companies and individuals to safely utilize AI, it is essential not to simply "use it because we can," but to understand these Responsible AI principles and integrate them into their activities. I believe this is an unavoidable path for balancing AI technology development with a healthy society.

How We Should Engage with AI: A Proposal from a Systems Integrator

Immediate "Risk Mitigation Measures" for Businesses

The Stability AI policy revision and the legal developments surrounding AI present a good opportunity for businesses and sole proprietors to rethink how they use AI. As a systems integrator (SIer), I propose the following risk mitigation measures:

- Establish and Implement an Internal AI Usage Policy
  - Clearly define rules on which AI tools to use, how to handle internal data, and to what extent AI-generated content can be released externally.
  - Regular training and information sharing are crucial to enhance employee literacy.

- Thorough AI Prompt Creation and Management
  - Avoid instructions that mimic the style of specific artists. Also, be cautious with expressions like "in the style of" or "__-like" if they refer to well-known works.
  - Document prompts in detail and save records to serve as "evidence" in case of unforeseen issues.

- Establish an AI-Generated Content Review Process
  - Don't use AI-generated content as is; always have a human review it to check for similarities with existing copyrighted works.
  - If necessary, seek external opinions, such as from legal experts.

- Scrutinize AI Vendor Contracts and Terms of Use
  - Carefully read the terms of service for any AI service you use. Confirm if commercial use is permitted, how copyright of generated output is handled, and how responsibilities are divided.
  - Depending on the company's size and intended use, standard terms may be insufficient, so consider negotiating individual contracts.

- Copyright Education and Risk Awareness Cultivation
  - AI technology is constantly evolving, and legal frameworks are changing. Continuous learning of the latest information and acquiring accurate knowledge about copyright and AI is required of all stakeholders.
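
To make the prompt management measures above a little more concrete, here is a minimal Python sketch of how prompts might be screened and recorded. Everything in it is an assumption for illustration: the phrase list, the function names (`screen_prompt`, `build_record`, `log_prompt`), the record fields, and the JSON Lines log format are hypothetical and not taken from any particular tool or policy. A string match like this is only a prompt for human review, not a legal check.

```python
import hashlib
import json
from datetime import datetime, timezone
from pathlib import Path

# Hypothetical phrase list for illustration; a real internal policy
# would define and maintain its own.
RISKY_PHRASES = ("in the style of", "-like")


def screen_prompt(prompt: str) -> list[str]:
    """Return the risky phrases found in the prompt (case-insensitive)."""
    lowered = prompt.lower()
    return [phrase for phrase in RISKY_PHRASES if phrase in lowered]


def build_record(prompt: str, model: str, user: str) -> dict:
    """Build one audit record: who ran which prompt on which model, and when."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "model": model,
        "prompt": prompt,
        # The hash lets a reviewer confirm later that the stored prompt
        # text matches what was recorded at generation time.
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "flags": screen_prompt(prompt),
    }


def log_prompt(log_path: Path, prompt: str, model: str, user: str) -> dict:
    """Append the record to a JSON Lines file and return it."""
    record = build_record(prompt, model, user)
    with log_path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(record, ensure_ascii=False) + "\n")
    return record
```

Calling `log_prompt(Path("prompts.jsonl"), prompt, model, user)` before each generation would leave one line per prompt, and any record with a non-empty `flags` list could be routed to the human review step described above.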

The Balance Between AI's "Freedom of Design" and "Social Responsibility"

The recent Stability AI policy revision once again highlighted the deep chasm between "technological freedom" and "ethical control." Because the code and weights of open models like Stable Diffusion are already in users' hands, restrictions can structurally be "circumvented" even if companies impose terms.

Indeed, there's a possibility of "forked models" emerging that don't comply with the terms, and models continuing to be distributed on "anonymous forums" or "Torrent sites" where technical regulation is difficult.

This confronts us with the reality that "terms can indicate what's right, but they cannot be a complete deterrent." The stricter regulations become, the more likely users are to drift away from legitimate corporate channels towards freer (but riskier) usage.

I believe this change is a crucial turning point as AI evolves from being merely a tool to becoming a social infrastructure.

It feels to me that we are being challenged not just on technical aspects but with more fundamental questions of "social design," such as "what kind of expressions are socially acceptable" and "who should decide that."

However, precisely because of this situation, positive developments are also emerging. For instance, newer models such as "Flux Schnell" from the developer "Black Forest Labs" are being offered under more liberal terms of use. This suggests that restrictions can, conversely, encourage technological development and diversification, fostering a healthy competitive environment.

Finally: How Will You Engage with AI?

The Stability AI policy revision was an event that made us reconsider how deeply this new technology, AI, impacts our society and values. We must confront the question not just of "what AI can do," but "what AI should be like in society."

AI is already deeply integrated into our lives and work. It's a current that won't stop. That's why we must not simply accept AI's evolution but instead think more carefully and proactively about "how we will use it" – the "design of freedom."

What constitutes ethical behavior? What is freedom that isn't oppressive? The lines are in the hands of those who develop the technology, those who provide the services, and each one of us who uses it.

In this significant change, how will you engage with AI, and what kind of society do you want to build?